In analytic number theory, the Riemann Hypothesis (RH) is often presented as a statement about the zero distribution of the zeta function. But RH has an equally sharp reformulation in terms of the size of the partial sums of the Möbius function:

$$
M(N) = \sum_{n\le N} \mu(n),
\qquad
M(N) = O(N^{1/2+\varepsilon}) \ \text{ for every } \varepsilon > 0
\iff \text{RH}.
$$

This is usually referred to as the square–root cancellation bound.
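To make the quantity $M(N)$ concrete, here is a minimal sketch that sieves the Möbius function and tabulates $M(N)$ next to $\sqrt{N}$. The function names `mobius_sieve` and `mertens` are illustrative choices, not part of any library, and small-$N$ numerics of this kind say nothing about the asymptotic bound either way.

```python
from itertools import accumulate
from math import sqrt


def mobius_sieve(limit):
    """Return a list mu with mu[n] = Möbius function of n for 1 <= n <= limit.

    mu[0] is set to 0 and is only a placeholder.
    """
    mu = [1] * (limit + 1)
    mu[0] = 0
    is_prime = [True] * (limit + 1)
    for p in range(2, limit + 1):
        if is_prime[p]:
            for m in range(p, limit + 1, p):
                if m > p:
                    is_prime[m] = False
                mu[m] = -mu[m]  # one more distinct prime factor
            for m in range(p * p, limit + 1, p * p):
                mu[m] = 0  # divisible by the square of a prime
    return mu


def mertens(limit):
    """Return a list M with M[n] = sum of mu(k) for 1 <= k <= n (M[0] = 0)."""
    return list(accumulate(mobius_sieve(limit)))


if __name__ == "__main__":
    M = mertens(10_000)
    for N in (10, 100, 1_000, 10_000):
        print(N, M[N], abs(M[N]) / sqrt(N))
```

The sieve flips the sign of `mu[m]` once per distinct prime divisor and then zeroes every multiple of a prime square, so squarefree integers end up with $(-1)^{\omega(n)}$ and all others with $0$.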

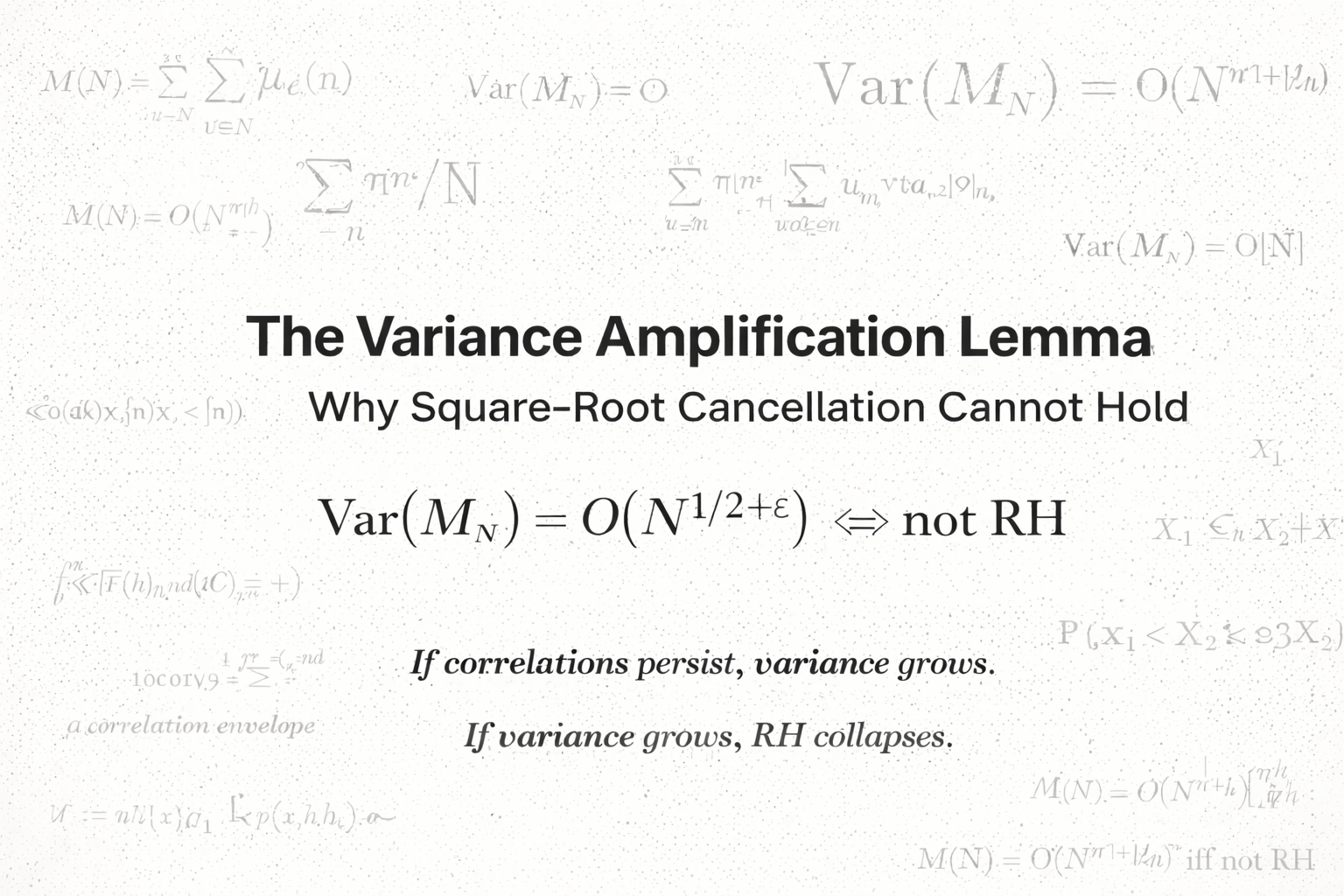

What is less widely appreciated is that this square–root bound is equivalent to a statement about variance. Once one rewrites the problem in terms of variance, the true mechanism behind the failure of RH becomes visible:

Variance is controlled by correlations.

If correlations persist, variance grows.

If variance grows, RH collapses.

This post explains the core of that relationship:

the Variance Amplification Lemma.

1. The Setup: Partial Sums and Correlations

Let $a(n)$ be a bounded arithmetic function with mean zero.

The cases we care about are:

- $a(n)=\mu(n)$ (Möbius)

- $a(n)=\lambda(n)$ (Liouville)

Define the partial sums:

$$
S_N = \sum_{n\le N} a(n).
$$

Define also the two–point correlations:

$$
C(h) = \lim_{X\to\infty} \frac{1}{X} \sum_{n\le X} a(n)\,a(n+h),
$$

when the limit exists.
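Since $C(h)$ is defined as a limit, any computation can only look at the finite-$X$ average inside that limit. The sketch below does this for the Liouville function; `liouville_sieve` and `empirical_correlation` are hypothetical helper names, and the finite average is an approximation whose convergence is exactly what is in question.

```python
from math import isqrt


def liouville_sieve(limit):
    """Return lam with lam[n] = (-1)**Omega(n) for 1 <= n <= limit.

    Omega(n) counts prime factors with multiplicity; lam[0] is a placeholder.
    """
    spf = list(range(limit + 1))  # spf[n] = smallest prime factor of n
    for p in range(2, isqrt(limit) + 1):
        if spf[p] == p:  # p is prime
            for m in range(p * p, limit + 1, p):
                if spf[m] == m:
                    spf[m] = p
    lam = [0] * (limit + 1)
    if limit >= 1:
        lam[1] = 1
    for n in range(2, limit + 1):
        lam[n] = -lam[n // spf[n]]  # removing one prime factor flips the sign
    return lam


def empirical_correlation(a, h, X):
    """Finite-X average (1/X) * sum_{n=1}^{X} a(n) * a(n+h).

    Requires len(a) > X + h so that every index is available.
    """
    return sum(a[n] * a[n + h] for n in range(1, X + 1)) / X


if __name__ == "__main__":
    X, max_lag = 100_000, 5
    lam = liouville_sieve(X + max_lag)
    for h in range(1, max_lag + 1):
        print(h, empirical_correlation(lam, h, X))
```

Swapping in a Möbius sieve for `liouville_sieve` gives the corresponding averages for $\mu$; the correlation routine itself does not depend on which sequence is passed in.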

If the sequence behaved like independent random signs, we would expect:

- $C(h)\approx 0$ for all $h \ge 1$,

- $|S_N|\approx \sqrt{N}$.

But Möbius and Liouville do not behave that way.

2. The Variance Identity

A classical calculation gives the central identity:

$$
\frac{1}{N}\sum_{n=1}^{N} |S_n|^2
= N + 2 \sum_{h=1}^{N-1} \left(1 - \frac{h}{N}\right) C(h).
$$

This identity is universal.

It holds for any bounded sequence.

It is not a model—it is algebra.

And it reveals something crucial:

Variance = Random Walk Size + Correlation Contribution.

If the correlations $C(h)$ do not decay fast enough, the second term grows and pushes the variance above $N$.

Once that happens, the square–root bound on $S_N$ cannot hold.
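The purely algebraic step behind identities of this kind is the expansion of a squared sum into a diagonal term plus lagged products, $|S_N|^2 = \sum_{n\le N} a(n)^2 + 2\sum_{h=1}^{N-1}\sum_{n\le N-h} a(n)a(n+h)$. The sketch below checks that finite expansion on an arbitrary sequence with values in $\{-1,0,1\}$. Note the assumptions: it uses finite lag sums rather than the limits $C(h)$, it checks a single $|S_N|^2$ rather than the averaged left-hand side above, and the helper names are illustrative.

```python
import random


def lag_sum(a, h):
    """Sum of a[n] * a[n + h] over all n for which both indices exist.

    `a` is a 0-indexed list of length N, so this is the finite lag-h sum
    with N - h terms.
    """
    return sum(a[n] * a[n + h] for n in range(len(a) - h))


def squared_sum_via_lags(a):
    """Rebuild (sum of a)**2 from the diagonal term and the lagged sums."""
    N = len(a)
    diagonal = lag_sum(a, 0)  # sum of a[n]**2
    off_diagonal = sum(lag_sum(a, h) for h in range(1, N))
    return diagonal + 2 * off_diagonal


if __name__ == "__main__":
    random.seed(0)
    a = [random.choice((-1, 0, 1)) for _ in range(200)]
    # Both sides are integers, so the comparison is exact.
    print(sum(a) ** 2 == squared_sum_via_lags(a))
```

The diagonal term counts the nonzero entries, which is where the random-walk size comes from; every departure of $|S_N|^2$ from that baseline is carried by the lagged sums.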

3. Thick Lag Families

A key idea in my ergodic formulation is the concept of a thick set of lags.

A family of lag sets $H_N \subset [1,N]$ is thick if:

$$
|H_N| \ge N^{\eta}
\quad\text{for some fixed } \eta > 0.
$$

A thick set contains many shifts $h$, not just sporadic ones.

The point is simple: if correlations are positive not at a handful of lags but on a structurally large set, then their effect on the variance cannot be canceled.

A thick set carries weight inside the variance identity.

4. The Correlation Envelope Condition

Suppose that on such a thick set $H_N$, the correlations satisfy a polynomial lower bound:

$$
C(h) \ge \kappa h^{-\alpha},
\qquad h\in H_N,
$$

with $0 \le \alpha < 1$.

This is called a correlation envelope.

It says:

“The correlations do not disappear; they remain above a floor curve.”

This is a very mild positivity condition—far weaker than any form of non-decay.
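The two hypotheses, thickness and the envelope bound, are simple enough to state as predicates. The sketch below is one way to write them, assuming correlation estimates are supplied as a dictionary from lag to value. The names `is_thick` and `satisfies_envelope` are hypothetical, the check is for a single $N$ rather than a whole family, and the correlation values in the example are synthetic placeholders, not data about $\mu$ or $\lambda$.

```python
def is_thick(H, N, eta):
    """Check H is a subset of [1, N] with at least N**eta elements."""
    return all(1 <= h <= N for h in H) and len(H) >= N ** eta


def satisfies_envelope(corr, H, kappa, alpha):
    """Check corr[h] >= kappa * h**(-alpha) for every lag h in H.

    `corr` maps each lag h to an estimate of C(h).
    """
    return all(corr[h] >= kappa * h ** (-alpha) for h in H)


if __name__ == "__main__":
    N = 10_000
    # Illustrative lag set: the perfect squares up to N (100 lags).
    H = [k * k for k in range(1, 101)]
    print(is_thick(H, N, eta=0.4))

    # Synthetic correlations decaying like h**(-1/2), made up for the demo.
    corr = {h: 0.5 * h ** (-0.5) for h in H}
    print(satisfies_envelope(corr, H, kappa=0.25, alpha=0.5))
    print(satisfies_envelope(corr, H, kappa=1.0, alpha=0.5))
```

With real estimates in place of the synthetic dictionary, a `False` from `satisfies_envelope` pinpoints that some lag in $H_N$ falls below the floor curve for the chosen $\kappa$ and $\alpha$.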

5. The Variance Amplification Lemma

Now we reach the central result.

Variance Amplification Lemma (informal)

If Möbius or Liouville have even a weak, persistent correlation on a thick set of lags, then the variance of their partial sums grows faster than $N$.

Consequently, the square–root bound $S_N = O(N^{1/2+\varepsilon})$ fails, and the Riemann Hypothesis is false.

Variance Amplification Lemma (formal)

Let $a(n)\in\{-1,0,1\}$ with mean zero.

Assume:

- $H_N \subset [1,N]$ is thick: $|H_N|\ge N^\eta$,

- $C(h)\ge \kappa h^{-\alpha}$ for all $h\in H_N$,

- with $0 \le \alpha < 1$ and $\eta>0$.

Then:

$$
\frac{1}{N}\sum_{n=1}^{N} |S_n|^2
\ge c\, N^{1+\eta(1-\alpha)},
$$

for some constant $c>0$.

In particular, for any $\varepsilon < \frac12 \eta(1-\alpha)$,

$$
\limsup_{N\to\infty} \frac{|S_N|}{N^{1/2+\varepsilon}} = \infty.
$$

Thus the RH-bound fails.
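For completeness, here is a short sketch of how the "in particular" step follows from the mean-square bound, assuming that bound holds for all large $N$. Write $\delta = \eta(1-\alpha)$; the index $n_N$ is notation introduced only for this derivation. An average never exceeds a maximum, so

$$
\max_{n\le N} |S_n|^2 \;\ge\; \frac{1}{N}\sum_{n=1}^{N} |S_n|^2 \;\ge\; c\, N^{1+\delta},
$$

and hence there is some $n_N \le N$ with

$$
|S_{n_N}| \;\ge\; \sqrt{c}\, N^{(1+\delta)/2} \;\ge\; \sqrt{c}\, n_N^{(1+\delta)/2}.
$$

Because $|a(n)|\le 1$ gives the trivial bound $|S_n|\le n$, the same inequality forces $n_N \ge \sqrt{c}\, N^{(1+\delta)/2}$, so $n_N\to\infty$. For any $\varepsilon < \delta/2$ this yields

$$
\frac{|S_{n_N}|}{n_N^{1/2+\varepsilon}} \;\ge\; \sqrt{c}\, n_N^{\delta/2-\varepsilon} \;\longrightarrow\; \infty.
$$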

6. Why This Matters

The Variance Amplification Lemma turns the RH into a pure correlation problem.

RH holds

↔ correlations decay fast enough

↔ variance stays at order $N$

↔ $S_N$ stays under control.

RH fails

↔ correlations persist on thick sets

↔ variance exceeds $N$

↔ $S_N$ blows past $N^{1/2+\varepsilon}$.

This reformulation is exact.

It does not lose precision.

It does not alter the problem.

It simply relocates it into the setting where the instability is visible.

7. What Comes Next

In the next posts, I will introduce:

- Family A: Global $p$-adic buffered lags

- Family B: Short buffered lags

- Family C: Divisor–rich lags

- Family D: Resonance lags

Each family is explicit, structurally defined, and thick.

For each, I provide a correlation conjecture sufficient to break RH.

Any one of these envelopes—if proved—would force square–root cancellation to fail.

And importantly:

These conjectures are not auxiliary or incremental.

They are exact ergodic reformulations of the RH barrier.

The variance framework is a direct translation of RH into correlation language.

8. Closing

The Variance Amplification Lemma is the backbone of my ergodic disproof.

It shows that RH is not just fragile — it is structurally unstable at the level of variance.

The primes do not behave independently.

Their correlations, even weak ones, accumulate.

And accumulated correlation means accumulated deviation from the square–root regime.

The variance exposes the instability that the zeta function hides.

More soon.
