In classical randomized linear algebra, methods like CountSketch achieve remarkable efficiency by replacing deterministic projections with random hashing. Instead of carefully constructing orthogonal transformations, CountSketch uses random sign flips and hash functions to compress high-dimensional data while approximately preserving inner products. This probabilistic “shortcut” allows algorithms for regression, low-rank approximation, and streaming computation to run in linear time and constant memory, sacrificing only a small and well-controlled amount of accuracy.

But efficiency through randomness has a hidden cost: it ignores structure. When applied to tensors—multiway data arrays that represent coupled interactions across modes—random sketching often re-introduces redundancy. Repeated updates cause rank growth, expanding the tensor core even when no new information is present. Tucker decompositions, while powerful, suffer from this phenomenon: every time a new direction enters the system, rank inflation accumulates.

My recent work reframes this tradeoff through commutator diagnostics. Instead of applying randomization uniformly, I use algebraic quantities—projector commutators and principal angles—to detect whether two tensor subspaces truly introduce new information. If they commute (i.e., share structure), no randomization is necessary. If their commutator is large, then a randomized sketch (CountSketch, Gaussian projection, or learned hashing) is selectively applied to capture the new directions efficiently.

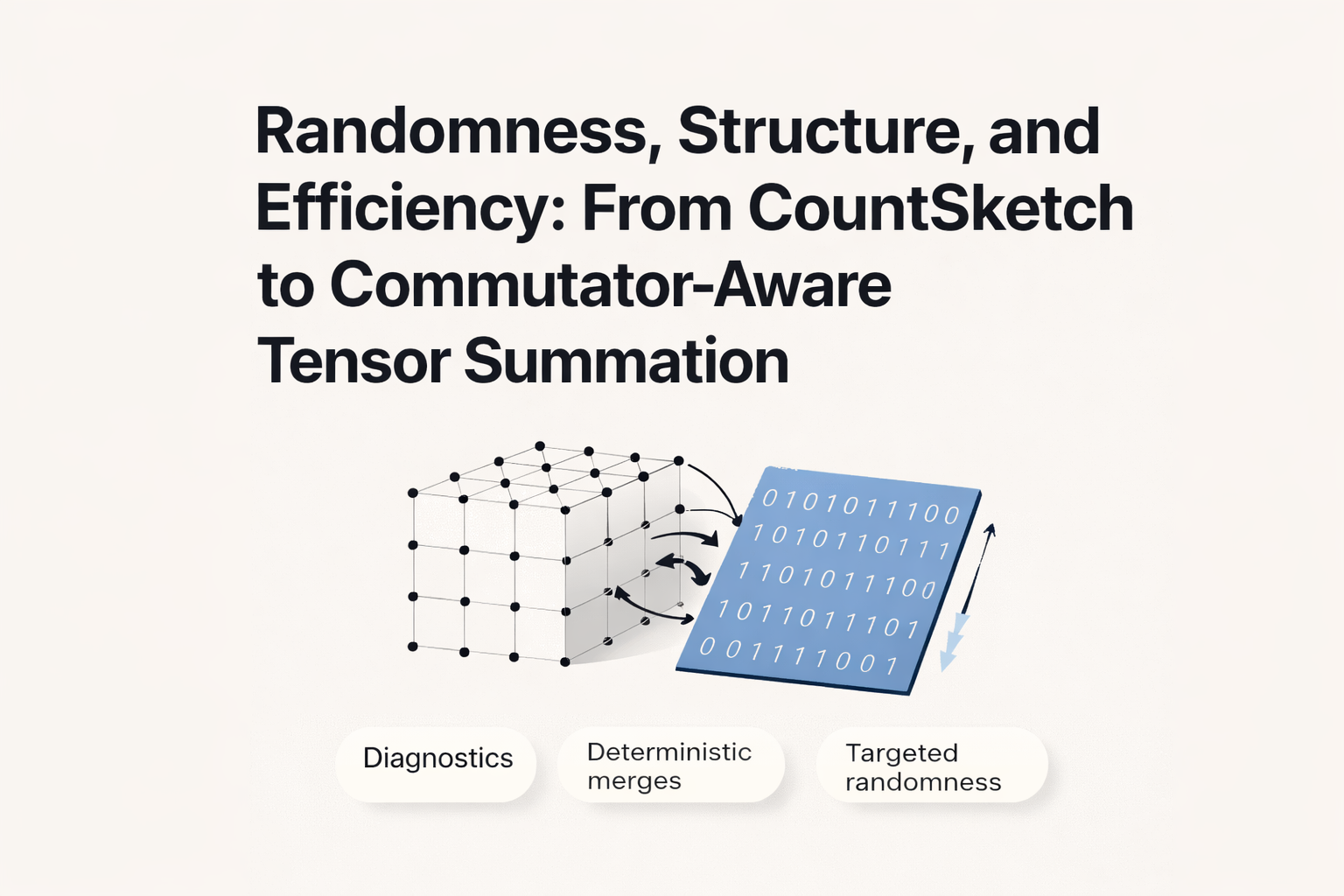

This strategy forms the basis of the Commutator-Aware Tucker Summation (CATS) algorithm.

CATS introduces three main principles:

- Diagnostics: Algebraic commutators identify when subspaces conflict.

- Deterministic merges: When commutators vanish, merge deterministically without sketching.

- Targeted randomness: Apply sketching only in non-commuting regimes, with bounded sketch size to control rank growth.

In effect, CATS merges the statistical efficiency of random sketching with the geometric discipline of algebraic tensor analysis. It treats randomness not as a default, but as a controlled response to non-commutativity.

This viewpoint connects the worlds of randomized linear algebra and categorical tensor theory. It suggests that efficiency can emerge not only from probability distributions but from diagnosed algebraic necessity—a bridge between learning-augmented methods and symbolic tensor geometry.

Upcoming results will formalize these ideas, providing commutator-based bounds for Tucker rank growth and demonstrating budget-aware sketching that matches or exceeds state-of-the-art performance while maintaining interpretability and memory efficiency.

Author Note

Written by Jasmine Burns, founder of JTPMath and creator of the Burns Law and Commutator-Aware Tucker Summation (CATS) framework. This post connects ideas from randomized sketching, tensor analysis, and categorical algebra to establish a new foundation for adaptive dimensionality reduction.

Further Reading

- A. Vakilian et al., Learning-Augmented CountSketch, Virginia Tech CS Colloquium (2025).

- D. Woodruff, Sketching as a Tool for Numerical Linear Algebra, Foundations and Trends in Theoretical Computer Science, 2014.

- J. Burns, Burns–Smith NSF Research Proposal (2025) — Commutator-Aware Tensor Framework for Adaptive Tucker Addition.

Discussion