I. Framing the Interface

- LLM as Partner, Not Oracle:

Treat the model as a collaborator in reasoning, not a replacement for intuition or proof.

- Session Structure:

Start each session with a clear research question or field goal.

Use the AI for expansion (generation, brainstorming, translation) and compression (refinement, error-checking, structuring).

II. Input Optimization

- Precision Prompts:

Ask multi-step, context-rich questions.

Provide relevant definitions, background, or notation in the initial prompt.

- Iterative Dialogue:

Treat each AI reply as a draft to refine—probe, clarify, and re-prompt for increased accuracy or structural alignment.
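One way to make the "Precision Prompts" advice concrete is a small helper that assembles definitions, notation, and a step list ahead of the question. This is only an illustrative sketch: the function name `build_prompt`, its parameters, and the sample topology question are assumptions introduced here, not part of any particular tool.

```python
def build_prompt(question, definitions=None, notation=None, steps=None):
    """Assemble a context-rich prompt (hypothetical helper, for illustration)."""
    parts = []
    if definitions:
        parts.append("Definitions:")
        parts.extend(f"- {term}: {meaning}" for term, meaning in definitions.items())
    if notation:
        parts.append("Notation:")
        parts.extend(f"- {symbol} denotes {meaning}" for symbol, meaning in notation.items())
    parts.append(f"Question: {question}")
    if steps:
        # Numbered steps turn a single question into a multi-step request.
        parts.append("Please answer in these steps:")
        parts.extend(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "\n".join(parts)


prompt = build_prompt(
    question="Is every compact subset of a Hausdorff space closed?",
    definitions={"compact": "every open cover has a finite subcover"},
    notation={"X": "a Hausdorff topological space"},
    steps=["State the claim precisely.", "Give a proof or a counterexample."],
)
print(prompt)
```

Keeping the context in structured arguments rather than free text makes it easy to reuse the same definitions and notation when re-prompting during an iterative dialogue.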

III. Authorship and Control

- Maintain the Human Voice:

Use your own language, intuition, and style throughout.

Edit AI outputs directly; never present unedited AI prose as final.

- Decouple AI Output from Final Form:

Each result, proof, or derivation generated by the AI should be checked, rewritten, and placed in your own narrative framework.

IV. Structural Use-Cases

- Live Derivation:

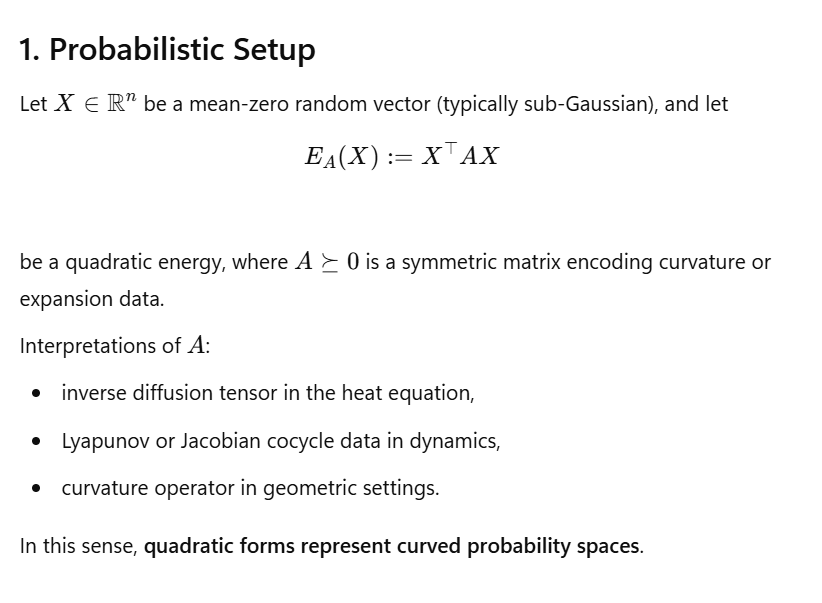

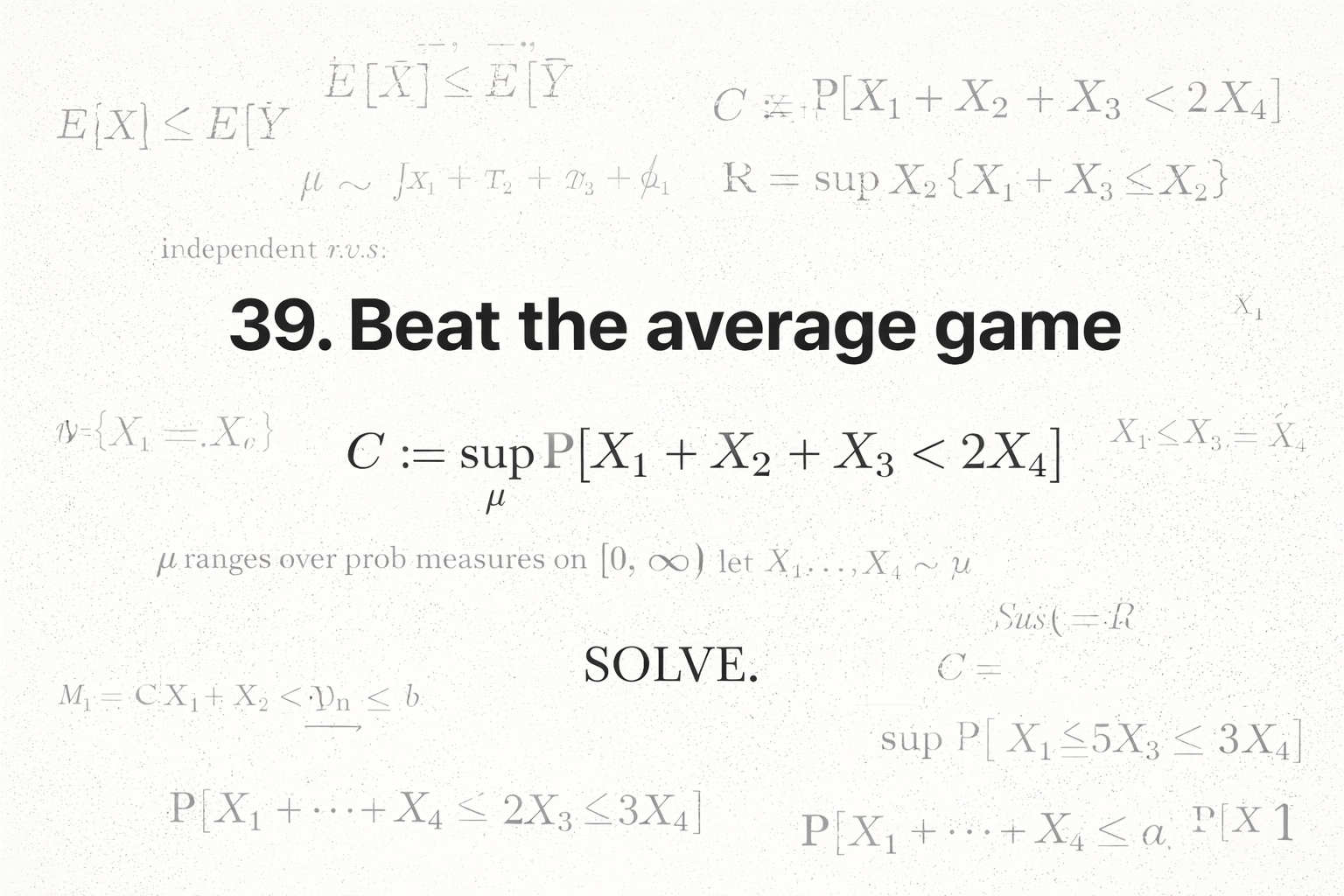

Use AI to expand intermediate steps in proofs, especially for routine calculations or checking known theorems.

- Field Mapping:

Employ the model to cross-link concepts across algebra, topology, analysis, and physics.

- Meta-Translation:

Ask for explanations in multiple registers—formal, intuitive, visual—to find the resonance that fits your own style.

V. Documentation & Reproducibility

- Timestamp and Archive:

Save significant AI-generated insights as annotated PDFs, Markdown files, or blog entries, dated and contextually labeled.

- Keep Source Chains:

Record the input prompt, AI reply, and your post-edits for major results; this builds provenance and enables reproducibility.

- Disclosure Clause:

Add a methods note:

“This work was produced in active collaboration with a large language model under the direction of the author; all results have been independently verified and integrated into the author’s narrative voice.”
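The timestamp-and-source-chain practice in this section can be sketched as a small record format. The following is a minimal illustration under stated assumptions: the field names, the helper names (`make_record`, `verify_record`, `to_json_line`), and the choice of a SHA-256 content hash are hypothetical conventions, not a prescribed standard.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """Hash a record body in a stable key order."""
    payload = json.dumps(body, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_record(prompt, reply, post_edit, label, timestamp=None):
    """Bundle one prompt / AI reply / post-edit chain into a dated, labeled record."""
    if timestamp is None:
        timestamp = datetime.now(timezone.utc).isoformat()
    record = {
        "timestamp": timestamp,
        "label": label,          # contextual label, e.g. project and lemma name
        "prompt": prompt,
        "ai_reply": reply,
        "post_edit": post_edit,  # the human-revised version
    }
    # The hash lets a later reader detect accidental changes to the record.
    record["sha256"] = _digest(record)
    return record


def verify_record(record):
    """Recompute the hash over everything except the stored hash itself."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]


def to_json_line(record):
    """Serialize the record as one line, suitable for an append-only log."""
    return json.dumps(record, ensure_ascii=False)


rec = make_record(
    prompt="Expand the induction step of Lemma 2.",
    reply="(AI draft of the induction step)",
    post_edit="(author's checked and rewritten version)",
    label="example-project / lemma-2",
)
print(to_json_line(rec))
```

Appending one such line per major result to a dated log file gives a simple provenance trail that can sit alongside the annotated PDFs or Markdown notes.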

VI. Limitations and Pitfalls

- Don’t Over-delegate:

Use AI for scaffolding and pattern expansion, but never for final theorem selection, conjecture claims, or the main proof step.

- Spot-Check for Hallucination:

Systematically check all claims, especially in deep technical sections.
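For claims that reduce to an identity between real-valued functions, a cheap first filter is numerical spot-checking at random points. The sketch below is one possible approach, not a substitute for proof: the function name `spot_check`, the sampling interval, and the tolerance are assumptions chosen for illustration. Finding no counterexample only means none was found among the sampled points.

```python
import math
import random


def spot_check(lhs, rhs, trials=200, low=-5.0, high=5.0, tol=1e-9, seed=0):
    """Return a sample point where lhs and rhs disagree, or None if none is found."""
    rng = random.Random(seed)  # fixed seed so the check is reproducible
    for _ in range(trials):
        x = rng.uniform(low, high)
        if not math.isclose(lhs(x), rhs(x), rel_tol=tol, abs_tol=tol):
            return x
    return None


# A correct identity: sin(2x) = 2 sin(x) cos(x)
ok = spot_check(lambda x: math.sin(2 * x),
                lambda x: 2 * math.sin(x) * math.cos(x))

# A plausible-looking but wrong claim: sin(2x) = 2 sin(x)
bad = spot_check(lambda x: math.sin(2 * x),
                 lambda x: 2 * math.sin(x))

print("counterexample for the correct identity:", ok)
print("counterexample for the wrong claim:", bad)
```

A returned point is a concrete counterexample to take back into the dialogue; a result of `None` justifies moving on to a careful by-hand or formal check, not skipping it.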

VII. Future Expansion

- Community Sharing:

Share protocols and insights to help others see how to get from “prompt” to “publishable result.”

- Iterate the Method:

As new model capabilities appear, re-examine which parts of your process benefit from AI augmentation and which require pure human insight.

Discussion